Quo Vadis – or Déjà vu All Over Again, or are metalworking fluid compounders, managers, and end-users once again being thrown to the lions?

The Short Version

A decade ago, the U.S. EPA’s Office of Pesticide Programs (OPP) issued their Reregistration Eligibility Decision (RED) on the most commonly used formaldehyde-condensate microbicide – triazine. In the triazine RED, the EPA limited the maximum permissible active ingredient concentration in end-use diluted metalworking fluids (MWFs) to 500 ppm (0.5 gal triazine per 1,000 gal MWF). Before the 2009 RED was issued the maximum permitted triazine concentration was 1,500 ppm (1.5 gal triazine per 1,000 gal MWF). Triazine is generally ineffective at 500 ppm, so the RED limited triazine use to ineffective concentrations. Now EPA has started along the same path with isothiazolinones – the use of which increased substantially as MWF compounders scrambled to find substitutes for and supplements to triazine. In this post I report about EPA’s isothiazolinone risk assessments and discuss their potential implications. At the end of this article I have provided a call to action. The U.S. EPA’s comment period will close on 10 November 2020. If you want to be able to continue to use isothiazolinones in MWFs, write to the U.S. EPA and let them know of your concerns. If you do not take the time to write now, you will have plenty of opportunity to be frustrated later.

Sordid Background, Act 1

In February 2009, in their RED for triazine (hexahydro-1,3,5-tris(2-hydroxyethyl)-s-triazine), the OPP limited the maximum active ingredient (a.i.) concentration in metalworking fluids (MWF) to 500 ppm1. Triazine is a formaldehyde-condensate. This means it is manufactured by reacting formaldehyde with another molecule – in this case, monoethanolamine at a three to one ratio (there are other formaldehyde-condensate microbicides produced by reacting formaldehyde with other organic molecules).

Formaldehyde is a Category 1A (substance known to have carcinogenic potential for humans) carcinogen2. EPA’s decision makers believed – contrary to the actual data – that when added to MWFs, triazine completely dissociated (split apart) to formaldehyde and monoethanolamine. In drawing this conclusion, EPA ignored data showing that in the pH 8 to 9.5 range typical of MWFs, there was no detectable free-formaldehyde in solution. They ignored data from air sampling studies that had been performed at MWF facilities3. They misread a paper that reported that triazine was not effective at concentrations of less than 500 ppm4. Triazine was to have been the first formaldehyde-condensate microbicide RED – to be followed with REDs for oxazolidines and other formaldehyde-condensates. Determining that it was not financially worth their while to develop the additional toxicological data that the U.S. EPA was likely to request, several companies who had been manufacturing formaldehyde-condensate products withdrew their registrations. Consequently, with their decision to reduce the maximum concentration of triazine to 500 ppm, the U.S. EPA effectively eliminated most formaldehyde-condensate biocide use in MWFs. I have discussed the implications of this loss elsewhere5 and will not repeat the tale here.

Sordid Background, Act 2

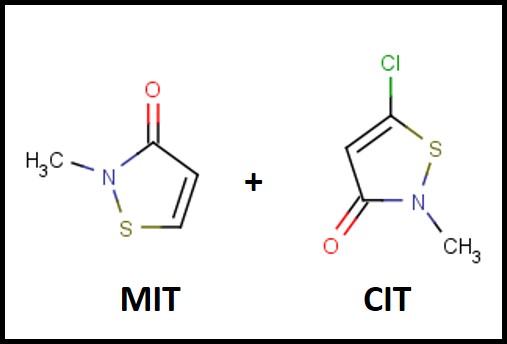

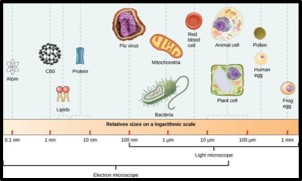

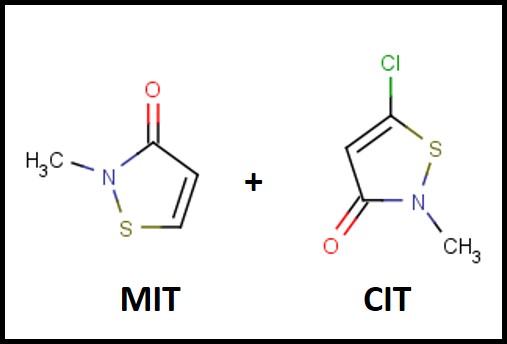

The first isothiazolinone microbicide – a blend of 5-chloro-2-methyl-4-isothiazolin-3-one (CMIT) and 2-methyl-4-isothiazolin-3-one (MIT – I’ll use CIT/MIT to represent the blend) – was introduced into the metalworking industry in the late 1970s (Figure 1). The original manufacturer – Rohm & Haas – knew that the product was a skin sensitizer (caused an allergic reaction on the skin of susceptible individuals) and made considerable efforts to educate users on how to handle the product safely. Moreover, CIT/MIT had already been in use as a rinse-off, personal care product preservative before it was marketed for use in MWFs. In the past decade, dermatitis complaints from users of CIT/MIT and MIT preserved personal care products have received considerable publicity. All FIFRA6 registered pesticides are subject to periodic reviews – including risk assessments (hazard characterizations) and Reregistration Eligibility Decisions (REDs). Various research reports and toxicological studies are reviewed as part of U.S. EPA’s hazard classification process, but there is no indication that the actual incidence of adverse health effects is considered.


Fig 1. The chemical structures of the MIT and CIT molecules in the first isothiazolinone blend marketed as a microbicide for use in MWFs.

The 2020 Hazard Characterization of Isothiazolinones in Support of FIFRA Registration Review7

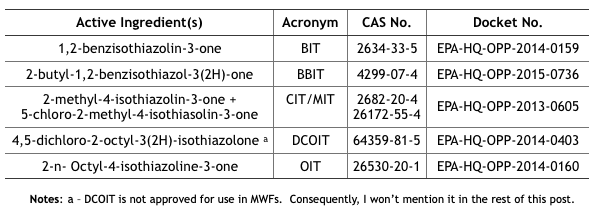

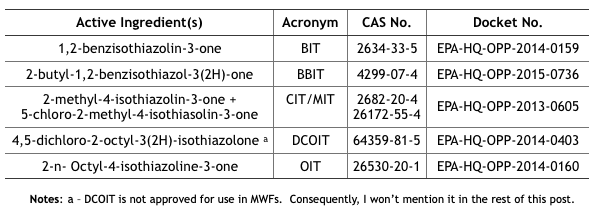

In April and May 2020, the U.S. EPA issued Registration Review Draft Risk Assessments for six isothiazolinones (ITs). The CIT/MIT and MIT assessments were provided in one document, thus there were five risk assessment reports plus the hazard characterization. I have listed these in Table 1.

Table 1. U.S. EPA Isothiazolinone Draft Risk Assessments

Note a – DCOIT is not approved for use in MWFs. Consequently, I won’t mention it in the rest of this post.

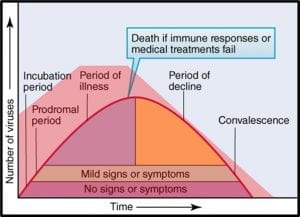

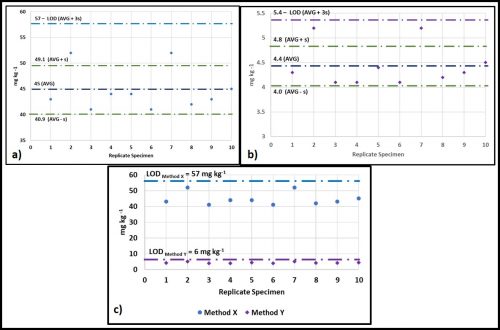

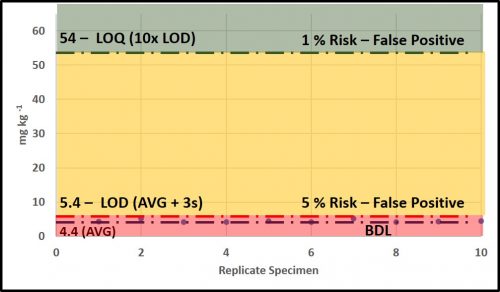

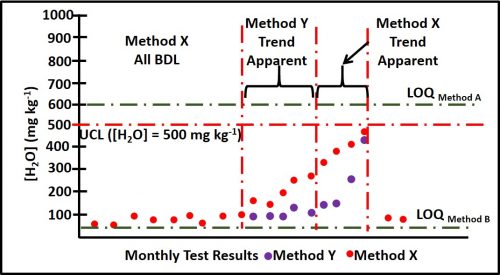

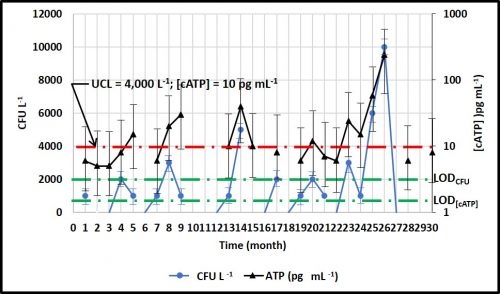

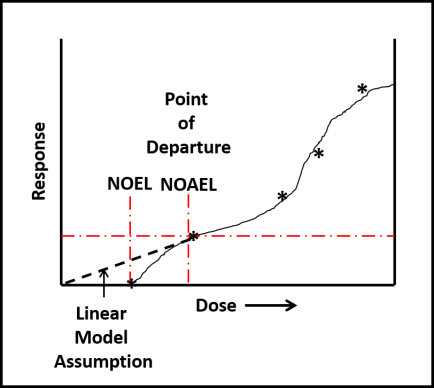

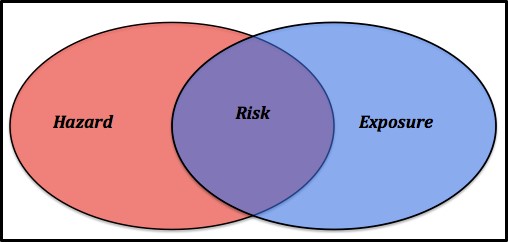

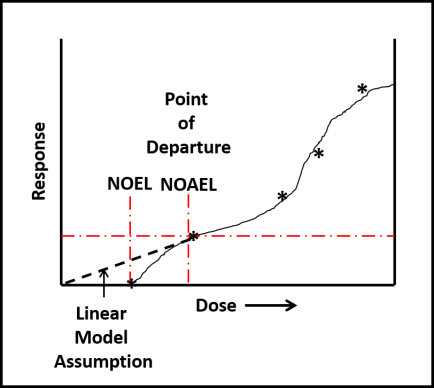

Despite toxicological data to the contrary (have you read this phrase before?), EPA chose to evaluate all ITs together – based on their putatively similar structures and toxicological properties. The best news is that none of the IT-microbicides were found to be either carcinogenic or mutagenic. However, as a class, they were designated as Category I (corrosive) for eye irritation and Category I (corrosive) for skin irritation (except for BIT – which was classified as non-irritating – Category IV). Moreover, the risk assessments used results from laboratory studies to identify Points of Departure (POD) for inhalation and dermal health risks. A POD is a point on a substance’s dose-response curve used as a toxicological reference dose (see Figure 2). For the IT risk assessments, the POD was the LOAEL (the lowest observable adverse effect level).

Each risk assessment discussed the different types of exposure relevant to each IT’s end-use applications and types of users – i.e., residential handlers (adults and children), commercial handlers, machinists, etc. Exposures related to MWF-use were addressed as a separate category. For both inhalation and dermal exposures, a level of concern (LOC) and a margin of exposure (MOE) were considered. The isothiazolinone LOCs were their PODs. The MOE is the ratio of the POD to the expected exposure. If MOE ≤ LOC, the exposure is considered to be of concern. If MOE > LOC, it is not of concern.

Fig 2. Toxicity test dose response curve. LOAEL is the lowest observable adverse effect level. NOEL is the no observable effect level. The linear model assumes that the NOEL is always at test substance concentration = 0. The biological model recognizes that most often NOEL is at a concentration >0. Dose can be a single exposure (for example, 1.0 mg kg-1 of test organism body weight) or repeated exposures (for example 0.1 mg kg-1 d-1). The response depends on what is being observed (skin irritation, lethality, etc.).

Isothiazolinone Inhalation MOEs

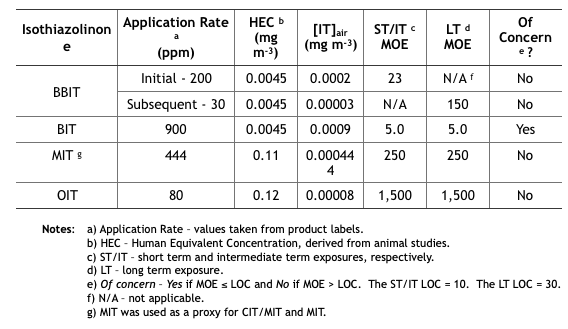

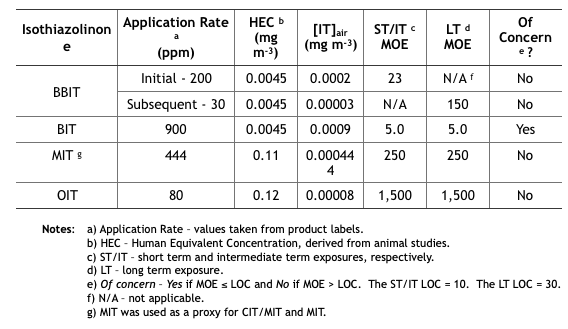

Table 2 summarizes the MWF inhalation MOE determinations from the five isothiazolinone (IT) risk assessments. These determinations are based on unsubstantiated assumptions.

- First, IT concentrations in the air ([IT]air, mg m-3) were estimated based on EPA’s misunderstanding of how microbicides are used in MWFs. EPA defined application rate as either an initial treatment, based on the maximum permissible dose listed on the product’s label, or subsequent treatments, based on the minimum effective dose listed on the product label. These categories assume that all IT-microbicides are used only tankside and that subsequent treatments are driven by MWF turnover rates rather than biocide demand8. However, except for CIT/MIT, IT-microbicides are typically formulated into MWFs.

- Next, [IT]air was estimated based on oil mist concentrations that OSHA had measured at MWF facilities from 2000 through 2009. During this period OSHA collected 544 air samples and computed the 8h time weighted average (TWA) oil mist concentration to be 1.0 mg m-3. The risk assessments did not provide a reference for the OSHA data, nor did they indicate either the range or the variability (standard deviation) of the mist concentrations measured. Moreover – given that IT-microbicides are water-soluble, but not particularly oil-soluble – EPA’s use of oil mist concentration data was scientifically indefensible.

- Compounding these two misperceptions, EPA calculated inhalation exposures by multiplying the assumed [IT] in the MWF by the average mist concentration. For example, if [MIT] in MWF is 444 ppm (mg IT L-1 MWF, i.e., a fraction of 444 × 10-6), then 444 × 10-6 × 1.0 mg m-3 = 0.000444 mg m-3 (444 ng m-3, where 1 mg = 1,000,000 ng). As illustrated in Table 2, the short term (ST) and intermediate term (IT) inhalation exposure LOC for MIT = 10, and the long term (LT) LOC = 30.

- Each IT’s MOE is computed from its 8h Human Equivalent Concentration (HEC – derived from animal toxicity data) and [IT]air: MOE = 8h HEC ÷ [IT]air. For MIT, the HEC = 0.11 mg m-3 and [IT]air = 0.000444 mg m-3, so the MOE for MIT = 0.11 ÷ 0.000444 = 248, which rounds to 250. If you think this risk assessment seems to be based on an unacceptable number of assumptions, you are not alone.
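
The arithmetic described in the bullets above can be sketched in a few lines of Python. This is only an illustration of the calculation as summarized in this post, not EPA’s actual model or code. The function names are hypothetical, and the inputs (444 ppm MIT, 1.0 mg m-3 mist, HEC = 0.11 mg m-3, LOCs of 10 and 30) are the MIT figures quoted above.

```python
def air_concentration(it_ppm: float, mist_mg_m3: float = 1.0) -> float:
    """Estimated [IT]air in mg/m3.

    Treats ppm as a fraction (1 ppm = 1e-6) of the airborne mist,
    which is the assumption described in the text.
    """
    return it_ppm * 1e-6 * mist_mg_m3


def margin_of_exposure(hec_mg_m3: float, it_air_mg_m3: float) -> float:
    """MOE = 8h HEC divided by [IT]air (both in mg/m3)."""
    return hec_mg_m3 / it_air_mg_m3


def is_of_concern(moe: float, loc: float) -> bool:
    """An exposure is of concern when MOE <= LOC."""
    return moe <= loc


# MIT example, using the values quoted in the post.
mit_air = air_concentration(444.0)           # mg/m3
mit_moe = margin_of_exposure(0.11, mit_air)  # unitless ratio

print(f"[MIT]air = {mit_air:.6f} mg/m3")
print(f"MOE      = {mit_moe:.0f}")
for label, loc in (("ST/IT", 10), ("LT", 30)):
    verdict = "of concern" if is_of_concern(mit_moe, loc) else "not of concern"
    print(f"{label} LOC = {loc}: {verdict}")
```

Every input except the HEC is an assumption rather than a measurement, so the resulting MOE inherits the uncertainty of all of them.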

Table 2. Risk Assessment Inhalation MOEs for Exposure to Isothiazolinone-Treated MWFs.

Isothiazolinone Dermal MOEs

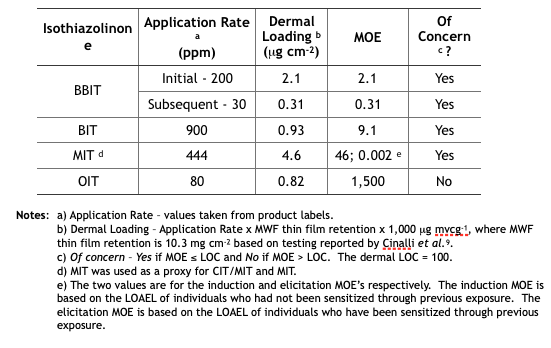

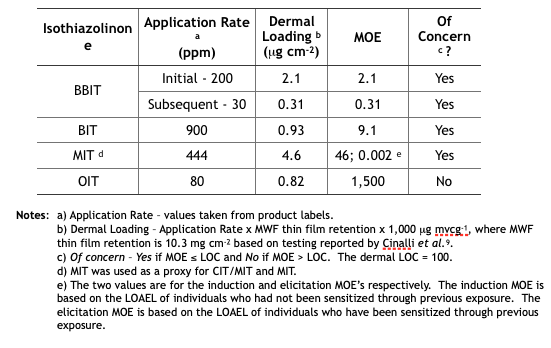

Table 3 summarizes the MWF dermal MOE determinations from the five isothiazolinone (IT) risk assessments. These determinations are based on the same unsubstantiated assumptions used to determine the inhalation MOEs.

Table 3. Risk Assessment Dermal MOEs for Exposure to Isothiazolinone-Treated MWFs.

Implications

Future Reregistration Eligibility Decisions (REDs) – as with triazine, EPA will use the risk assessments as the basis for the respective isothiazolinone (IT) REDs. The agency will most likely restrict end-use concentrations to levels that ensure Margins of Exposure (MOEs) are greater than Levels of Concern (LOCs). The only IT not likely to be affected is OIT. Its inhalation and dermal MOEs are in the “not of concern” range. In contrast, BIT’s inhalation and dermal MOEs are both of concern. We can anticipate that the EPA’s BIT RED will limit the maximum concentration in end-use diluted MWFs to a level that will ensure that both MOEs are greater than the respective LOCs. We can also anticipate that the maximum permitted BBIT, CIT/MIT, and MIT concentrations in end-use diluted MWFs will be reduced so that the dermal MOE is greater than the dermal LOC. With an elicitation MOE = 0.002 for CIT/MIT and MIT, it is possible that EPA will simply prohibit their use in MWFs.
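
To see how a concentration limit could follow from an MOE, the inhalation calculation described earlier can be inverted. The sketch below is an assumption about how such a limit might be back-calculated, not a description of how EPA will set limits. The function name is hypothetical, and the dermal assessments use a different model whose inputs are not given in this post. Only the MIT inhalation values (HEC = 0.11 mg m-3, mist = 1.0 mg m-3, LOCs of 10 and 30) come from the text.

```python
def max_concentration_ppm(hec_mg_m3: float, loc: float,
                          mist_mg_m3: float = 1.0) -> float:
    """Concentration (ppm) at which the inhalation MOE equals the LOC.

    Derived from MOE = HEC / (ppm * 1e-6 * mist). Because MOE <= LOC
    counts as "of concern", a permitted concentration would have to be
    strictly below the value returned here.
    """
    return hec_mg_m3 / (loc * mist_mg_m3 * 1e-6)


# MIT inhalation example (hypothetical back-calculation).
for label, loc in (("ST/IT", 10), ("LT", 30)):
    limit = max_concentration_ppm(0.11, loc)
    print(f"{label} LOC = {loc}: MOE equals LOC at about {limit:,.0f} ppm")
```

A smaller HEC or a larger LOC lowers the ceiling proportionally, which is why the choice of toxicological endpoint matters so much to the outcome.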

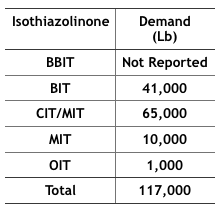

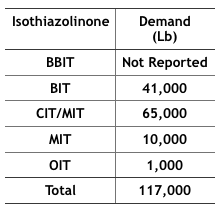

Economics – The 2012 Kline specialty biocides report10 projected that by 2017, 117,000 pounds of IT-microbicides would be used in MWFs in the U.S. (Table 4). In particular, BIT use has increased both as a stand-alone microbicide and as an active ingredient blended with one or more other active ingredients (for example BIT + triazine, BIT + sodium pyrithione, and BIT + bromo-nitro-propanediol – BNPD). The fate of these formulated microbicides could be affected by the new BIT RED.

Table 4. Projected 2017 IT-Microbicide Demand for Use in MWFs (from IT product U.S. EPA Risk Assessments).

If effective microbicides cannot be used to protect MWFs against microbial contamination, one or more of several scenarios is likely to unfold. In the first, MWF functional life will be severely reduced. Systems that have been running for years without a need for MWF draining, cleaning, and recharging (D, C, & R) are likely to require D, C, & R multiple times per year. This will increase MWF and waste treatment/handling costs. In the second, MWF-compounders will modify their formulations to include molecules that are toxic but do not have pesticide registration. This would potentially increase the health risk to machinists and other workers routinely exposed to MWFs. A third would be the increased use of biostable functional additives. The list of biostable functional products has grown substantially over the past two decades, and its growth is likely to accelerate as effective microbicide availability continues to shrink. Many currently available synthetic MWFs are quite resistant to microbial contamination. However, they are not suited to all metalworking applications. New applications research, using recently developed functional additives, could close this applications gap. A fourth possibility is that MWF-compounders will try to adopt the intentional bioburden model used by one compounder. One MWF product line supports an apparently benign bacterial species whose presence seems to inhibit the growth of potentially damaging (biodeteriogenic) microbes. All of these scenarios translate to increased cost.

Health Issues – On countless previous occasions, I have discussed the potential health issues associated with uncontrolled microbial contamination (for my most recent paper – co-authored with Dr. Peter Küenzi – go to: tandfonline.com). There is some evidence that conventional mist collectors do not do a great job of scrubbing bioaerosols from plant air. MWF bioaerosols are whole cells and cell parts that come from the recirculating fluid and system surfaces. They cause or exacerbate allergenic and toxigenic respiratory disease. If MWF bioburdens cannot be controlled, MWF bioaerosols are likely to pose an increased worker health risk.

Problems with the U.S. EPA’s Isothiazolinone Risk Assessments and Call to Action

As I noted in my synopsis at the beginning of this post, the proposed risk assessment documents are open for comments until 10 November 2020. The U.S. EPA webpage provides instructions for how to submit comments to any or all of the risk assessment documents (dockets in EPA jargon).

Dr. Adrian Krygsman, of Troy Corporation, has prepared talking points for industry stakeholders. I have previously had Dr. Krygsman’s talking points broadcast to ASTM E34.50 members and STLE metalworking fluids community members. I am copying them below in their original form:

* * *

Specific Comments on Metalworking Fluids:

U.S. EPA DRAFT RISK ASSESSMENTS: BIT-CMIT-OIT

Focal Points

A. General Comments on Approach:

- According to EPA’s assessments there are concerns over the occupational risks associated with MWF’s due to inhalation and dermal exposure concerns. This is based on:

- – Toxicological endpoints chosen for dermal and inhalation risk assessments (occupational and residential) are ultra conservative due to:

- – Although there are separate databases for each IT EPA considers their overall response to be similar (corrosivity/irritation in sub-chronic studies) allowing them to interchange the most sensitive tox. Endpoints as needed per each individual assessment.

- – Use of tox. Endpoints from other IT’s (e.g.- use of DCOIT inhalation threshold) for BIT.

- – EPA uses a model to address spray mist levels of IT’s in air due to short term/intermediate term exposure.

- – EPA addresses dermal exposure using their reliance on in-vitro/in chemico studies on IT’s. This approach, first validated in the EU for cosmetic products, using acute neural network approach and Repeated Open Insult Tests (ROAT) to set dermal thresholds for elicitation (that concentration which causes a skin reaction) and induction (period of time needed to induce a dermal allergic reaction). EPA is using an approach typically used for cosmetic products to create new thresholds for dermal exposure. Using this approach, no IT will pass their ultra conservative dermal exposure approach.

- – EPA uses a dermal immersion model to conduct specific assessments for metalworking fluids.

B. Specific Comments

- EPA has used maximum use rates in all of their assessments. Rates need to be checked.

- EPA is misleading especially for CMIT/MIT by indicating 39 publicized adverse incidents. How many incidents of dermal rash or irritation are seen in the MWF industry?

- Are the models EPA is using for MWF’s appropriate (e.g. dermal immersion model)?

- EPA’s use of toxicological endpoints from other IT’s interchangeably. For example why choose DCOIT inhalation tox. Data for BIT? DCOIT is not a suitable surrogate for BIT. It is highly chlorinated versus BIT.

- The IT Task Force is submitting human data to address EPA’s use of their non-animal data. Due to EPA’s reliance on this data they are obligated to account for intraspecies and interspecies differences (10X safety factors) resulting in a Margin of Exposure of 100X. Coupled with the low values obtained from their non-animal dermal studies it will be impossible to address dermal exposure effects, unless EPA validates this EU exposure approach before a scientific advisory panel (SAP). EPA considers their approach validated because experts in the EU have reviewed the approach and data. It has not been validated here.

- The industry can not allow an assessment approach used for “leave-on cosmetics” to be used for regulation of industrial chemicals.

- If PPE are incorporated into EPA’s assessments typical uses such as in-preservation of MWF’s still do not pass EPA’s dermal assessment. This is counter intuitive.

- For CMIT/MIT EPA interchanges use rates of MIT at 400 ppm against CMIT/MIT values of 135 ppm.

C. Conclusion:

- Major concerns have been raised for inhalation and dermal exposure from exposure to MWF’s. All IT’s assessed are problematic for these two routes of exposure. This is a function of EPA’s approach to group IT’s together and utilize an approach for dermal exposure which has never been used before.

- The IT task force is combatting EPA’s approach for dermal assessment by submitting human sensitization data. This is the only way to show EPA this approach is wrong.

- There are no concerns for environmental fate or ecotox. Effects.

Questions/Contact: Adrian Krygsman, Director, Product Registration

Troy Corporation

Email: Krygsmaa@Troycorp.com

Phone: 973-443-4200, X2249

* * *

Please send your comments and questions about this blog post to me at: fredp@biodeterioration-contol.com.

1 archive.epa.gov

2 IARC Monographs on the Evaluation of Carcinogenic Risks to Humans Volume 88 (2006), Formaldehyde, 2-Butoxyethanol and 1-tert-Butoxypropan-2-ol. https://publications.iarc.fr/106

3 Cohen, H.J. (1995), “A Study of Formaldehyde Exposures from Metalworking Fluid Operations using Hexahydro-1,3,5-Tris (2-hydroxyethyl)-S-Triazine,” In J.B. D’Arcy, Ed., Proceedings of the Industrial Metalworking Environment: Assessment and Control. American Automobile Manufacturer’s Association, Dearborn, Mich., pp. 178-183.

4 Linnainmaa, M., Kiviranta, H., Laitinen, J., and Laitinen, S. (2003), Control of Workers’ Exposure to Airborne Endotoxins and Formaldehyde During the Use of Metalworking Fluids, AIHA Journal 64:496–500.

5 Passman, F. J., (2010), Current Trends in MWF Microbicides. Tribol. Lub. Technol., 66(5): 31-38.

6 FIFRA – Federal Insecticide, Fungicide, and Rodenticide Act, 7 U.S.C. §136 et seq. (1996). https://www.epa.gov/laws-regulations/summary-federal-insecticide-fungicide-and-rodenticide-act

7 U.S. EPA (2020) Hazard Characterization of Isothiazolinones in Support of FIFRA Registration Review. https://beta.regulations.gov/document/EPA-HQ-OPP-2013-0605-0051

8 Biocide demand is the sum of all factors that decrease a microbicide’s concentration in a treated MWF. These factors include the microbes to be killed, chemical reactions with other molecules present in MWFs, evaporation (for volatile microbicide molecules), transport in MWF mist particles, drag-out, and dilution.

9 Cinalli, C., Carter, C., Clark, A., and Dixon, D. (1992), A Laboratory Method to Determine the Retention of Liquids on the Surface of Hands, EPA 747-R-92-003. https://nepis.epa.gov/Exe/ZyPDF.cgi/P1009PYK.PDF?Dockey=P1009PYK.PDF

10 Kline report: “Specialty Biocides: Regional Market Analysis 2012- United States” published April 3, 2013.